Deciding which tech stack to work with can be overwhelming: business demands keep changing, the range of platforms and considerations is vast, some skills are easier to hire for than others and, most importantly, there is the cost.

As soon as cost is mentioned, people often think the answer is open source because it’s free, which is not entirely true. Open source does not always mean you’re not investing anything. In many cases you might be investing the most costly asset of all, your engineers’ time, to build what open source platforms lack out of the box, and managing the team’s capacity is one of the most important aspects of software development.

There are a couple of dimensions to this problem, all of which indirectly affect cost. Let’s look at it from different perspectives to get a holistic view.

Building services

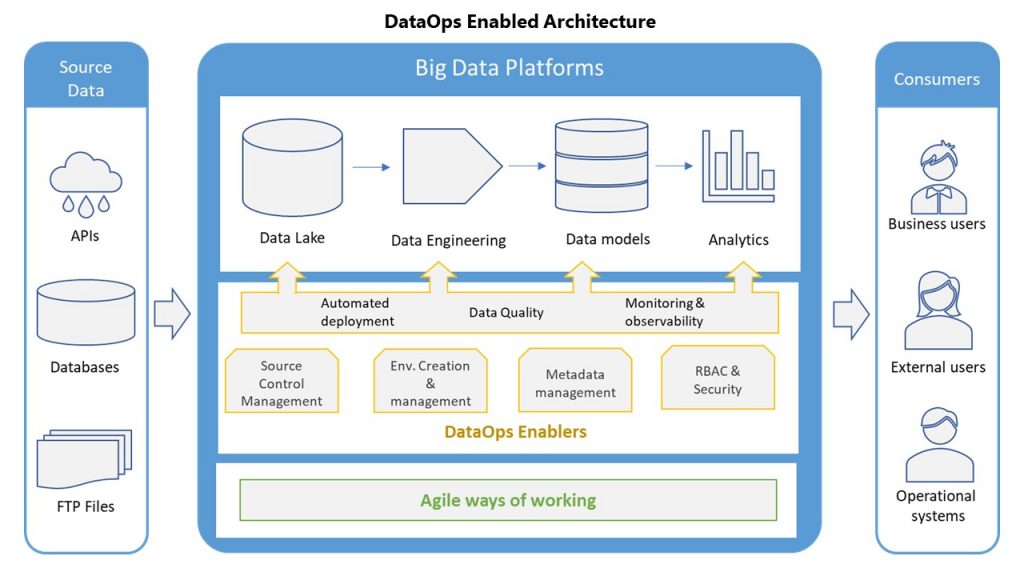

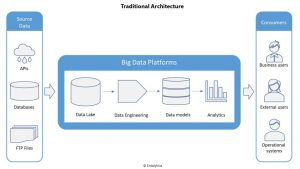

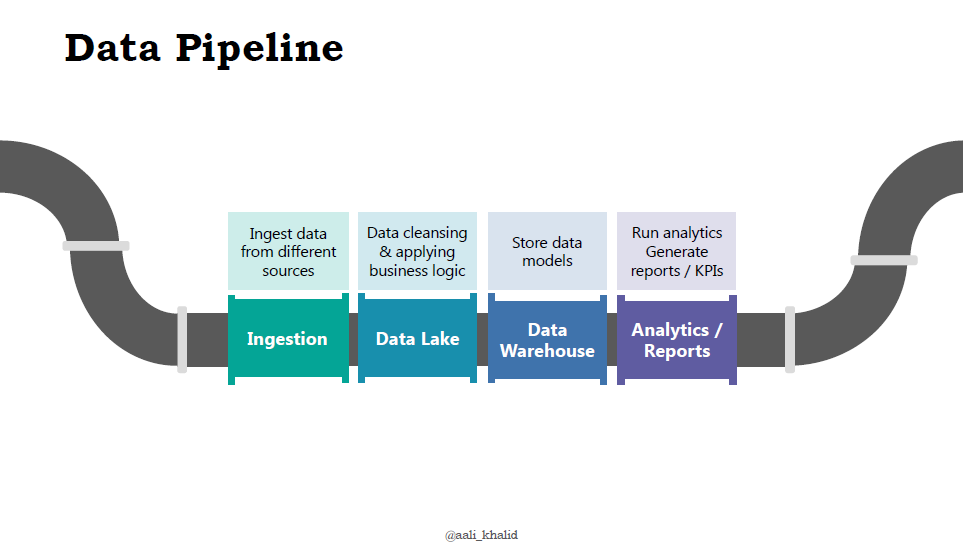

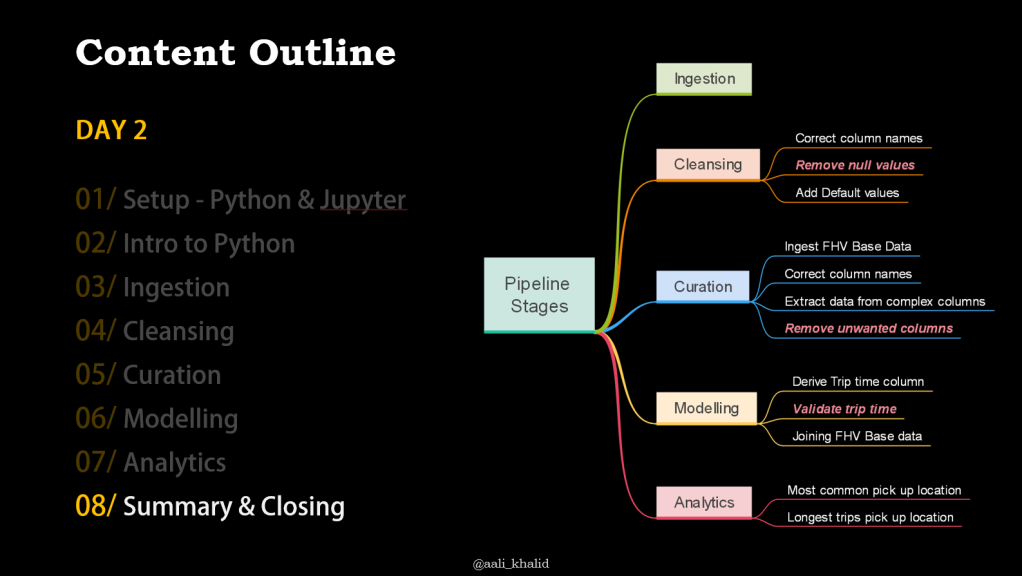

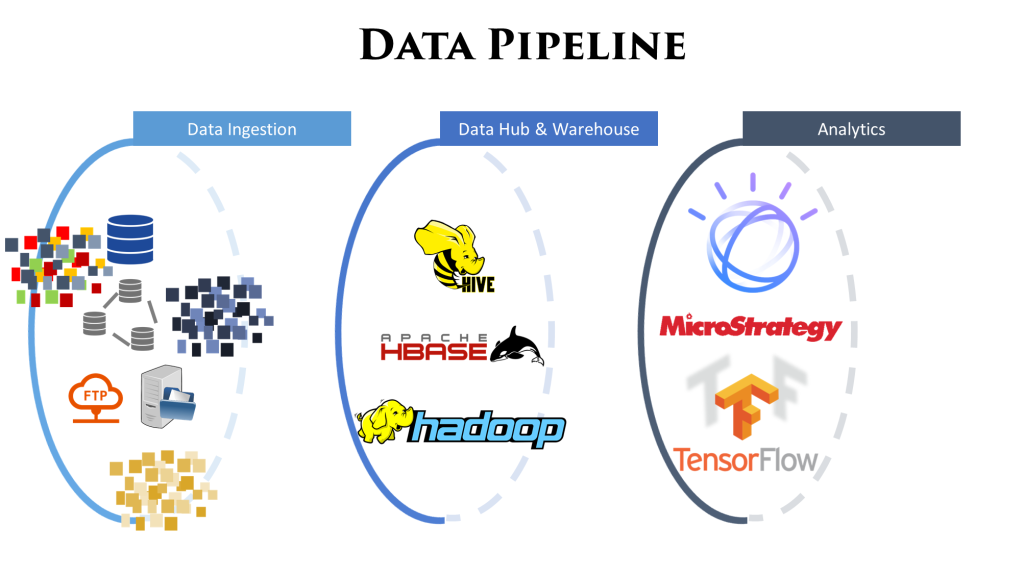

Building data pipelines means ingesting, cleansing, curating and modelling data, and at the end of the day all of that really requires just plain SQL to manipulate the data. However, to get to the point where we can write those SQL(-like) queries, we need to build a lot of underlying services for:

- Reading data from source systems (databases, APIs, Kafka, FTP etc.) in different formats (SQL tables, JSON, XML, CSV etc.) and saving it in a distributed file system (HDFS, ADLS etc.).

- For curation: setting configs, reading data of different formats / locations into dataframes, cleansing and curating it, then saving it back.

- Writing lots of util functions for commonly repeated activities, plus UDFs (user-defined functions), of which data pipelines need quite a few.

- Maintaining these util libraries to support changes in the underlying platform / libraries (e.g. Spark, configs, logging etc.).

- Building added services on top of the common utils for other common activities, like pushing data to other platforms, querying data from various locations across the data pipeline or serving it through APIs for real-time use cases.
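To give a feel for what even the smallest of these utilities involves, here is a minimal sketch of a format-dispatching reader plus one cleansing helper. It uses only the Python standard library and works on in-memory text. The names `read_records`, `clean_record` and `READERS` are my own placeholders, not part of any platform or library, and a real version would also have to deal with storage locations, schemas, retries and logging.

```python
import csv
import io
import json
from typing import Callable, Dict, List

Record = Dict[str, str]


def _read_csv(text: str) -> List[Record]:
    # The first line is treated as the header row.
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


def _read_json_lines(text: str) -> List[Record]:
    # One JSON object per non-empty line; values are stringified so both
    # readers return the same record shape.
    return [
        {key: str(value) for key, value in json.loads(line).items()}
        for line in text.splitlines()
        if line.strip()
    ]


# Hypothetical registry: supporting a new format means adding one entry here.
READERS: Dict[str, Callable[[str], List[Record]]] = {
    "csv": _read_csv,
    "jsonl": _read_json_lines,
}


def read_records(text: str, fmt: str) -> List[Record]:
    """Parse raw text in the given format into a list of records."""
    try:
        reader = READERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt!r}") from None
    return reader(text)


def clean_record(record: Record) -> Record:
    """Trim whitespace and lowercase the column names of one record."""
    return {key.strip().lower(): value.strip() for key, value in record.items()}


rows = [clean_record(r) for r in read_records("ID, Name\n1, Ada \n", "csv")]
```

Even this toy version has to make decisions about error handling and a common record shape, and those are exactly the things that then need maintaining.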

All of these activities take considerable time and effort to develop and mature. Much of this can be done more effectively with managed services, whose vendors have teams specializing in just building libraries and services on top of open source technologies.

Keeping up with releases

It does not end once these services are built. The underlying technologies are in constant flux, and the chosen tech stack often keeps changing too as demand changes and the industry evolves. That means maintaining these services is often a big piece of work as well.

Furthermore, flakiness in the operation of these services has a direct impact on the analytics solutions being designed. Teams who choose to build these services on open source foundations often find that a good portion of their production issues can be attributed to these services not working as intended.

Reusing common services

The activity of building these services on top of vanilla open source libraries is often not given its due importance. Engineers often don’t consider this a significant piece of work, and easily end up writing their own logic for something which has been solved already.

A theme I have seen across multiple organizations is how much rework teams end up doing. Instead of reusing what some other team has done, they all end up writing their own ‘reusable service’ which is being reused only by them! This is a massive and unnecessary overhead.

Ultimately it’s SQL

To get the insights and analytics we need, the core activity is writing SQL logic across the pipeline, which, considering all the points above, usually turns out to be a very small portion of the total effort. Most of the team’s energy is spent managing and maintaining these services, while most of the value to the business comes from the small portion of time we invest in writing that SQL.
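To make the "it’s just SQL" point concrete, here is a small sketch of a cleansing and curation step written as a single query. It runs against an in-memory SQLite database purely so that it is self-contained. The `raw_orders` table, its columns and its rows are made up for illustration, and on a real platform the same logic would more likely be Spark SQL or a warehouse dialect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Made-up raw data: untrimmed names, a duplicated row and a missing amount.
conn.executescript(
    """
    CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT);
    INSERT INTO raw_orders VALUES
        (1, ' alice ', '10.50'),
        (2, 'BOB', '4.00'),
        (2, 'BOB', '4.00'),
        (3, 'alice', NULL);
    """
)

# Deduplicate, drop rows without an amount, normalize names, then aggregate.
CURATE_SQL = """
SELECT LOWER(TRIM(customer)) AS customer_name,
       ROUND(SUM(CAST(amount AS REAL)), 2) AS total_amount
FROM (SELECT DISTINCT order_id, customer, amount FROM raw_orders)
WHERE amount IS NOT NULL
GROUP BY LOWER(TRIM(customer))
ORDER BY customer_name
"""

curated = conn.execute(CURATE_SQL).fetchall()
print(curated)  # [('alice', 10.5), ('bob', 4.0)]
```

The query is the part the business actually cares about. Everything needed to get `raw_orders` populated reliably in the first place is the part that eats the team’s time.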

Departmental Objectives

As discussed, building these services is a time-consuming activity. Another dimension to look at is your departmental objectives. As an analytics team, are you tasked with generating insights for the organization? Or are you meant to create a platform for business users to generate insights themselves? And yes, there is a big difference.

Generating insights

This is where most teams start: business users want insights into their operations and turn to a newly formed analytics team to generate them. As they see value, the number of use cases grows and so does the team. Depending on how far data-driven decision making has been adopted, an organization can remain heavily dependent on the analytics team to generate its BI reports.

In some cases analytics teams wise up a little and let business users create some custom reports themselves, freeing up engineers’ time from translating small requests into basic SQL queries that join a few tables / columns in a view.

If this is where your team is today, then your primary job is probably to build data models and insights for your organization. Building common services ‘to support’ this work is a distraction, and not necessarily something you want to spend your time perfecting.

Truly self service

As organizations mature and business users become tech-savvy enough to query a data warehouse and build reports for themselves, you’ve started to move to self-service. By then it’s highly likely that you are bringing in a considerable amount of data from various parts of the organization, meaning your compute costs are shooting up and the cost of managed services may start to outweigh their benefit.

At this stage it may make sense for the core data engineering team to start building those common services and make that their primary job. By now all they are concerned with is pumping new data sources into the data warehouse on a solid foundation, while the rest of the organization has enough skilled people to generate reports and basic insights from the data sitting in the data warehouse, and analytics teams run ML models on the data lake.

Tech stack considerations

Another common reason for choosing open source, and having the department build services on top of it, is flexibility & portability, which teams hope will make the platform future-proof. At first this does make sense, but there are some nuances to it.

Vendor lock-in

Technology, platforms and the maturity of design practices are all changing at an incredible pace. Within a few years the whole picture can change, and a tech stack which was once considered top of the line quickly becomes legacy and sluggish.

In this climate of change, relying heavily on any one cloud vendor does not sit well with organizations, and rightly so. Portability is on everyone’s mind: many mature organizations get stuck with one vendor and may end up paying significantly higher costs just to continue business as usual.

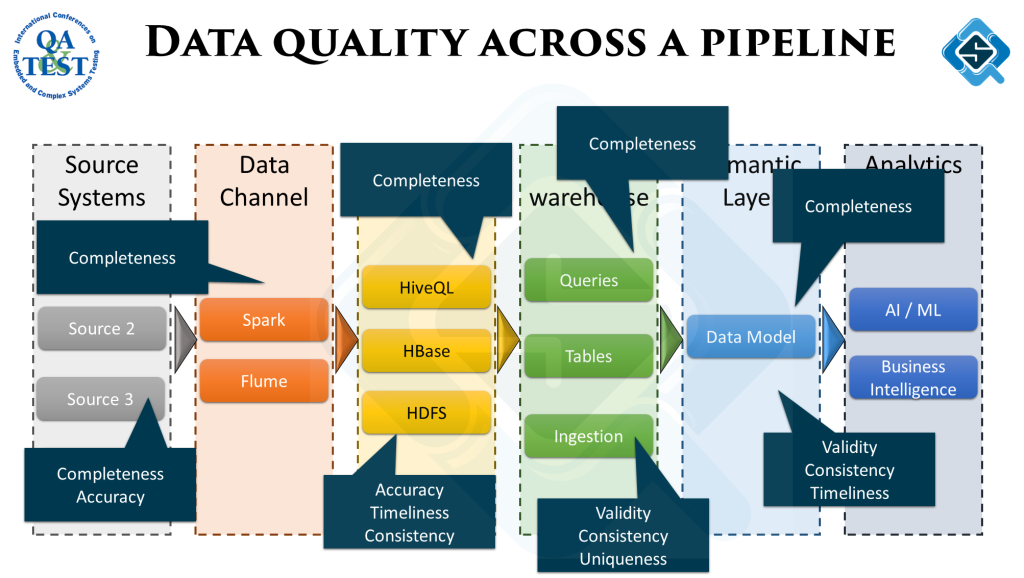

With open source, organizations hope to maintain portability so they can switch platforms / cloud providers if the need arises. This is true to an extent: if the bulk of your pipeline is built with Spark, for example, all platforms will support Spark and make the move possible. However, with data pipelines there are a lot more considerations than just support for e.g. Spark.

Across a typical data pipeline, there are a LOT of platforms you’d need integrations with, plus a lot of peripheral services like scheduling, logging & monitoring and security / RBAC. Even if your main ETL pipelines are open source, there is a lot more going on around them which is not as portable and will have tight dependencies on the platform you are using. Many times I’ve seen teams struggle just to migrate from one version to the next. The point is that any migration is going to be an overhead and a painful process. Sure, it can get a little easier if you use the right design principles, but it will still pose a considerable challenge.
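One design principle that can help here is keeping platform-specific calls behind a small interface, so pipeline code depends on the interface rather than on a vendor SDK. The sketch below is only an illustration of that idea: `ObjectStore`, `InMemoryStore`, `LocalDiskStore` and `publish_report` are hypothetical names, and a real adapter for a cloud storage service would wrap that vendor’s client library instead of the local disk.

```python
from pathlib import Path
from typing import Dict, Protocol


class ObjectStore(Protocol):
    """The only storage surface the pipeline code is allowed to see."""

    def write_text(self, key: str, text: str) -> None: ...

    def read_text(self, key: str) -> str: ...


class InMemoryStore:
    """Adapter for tests: keeps objects in a dict."""

    def __init__(self) -> None:
        self._objects: Dict[str, str] = {}

    def write_text(self, key: str, text: str) -> None:
        self._objects[key] = text

    def read_text(self, key: str) -> str:
        return self._objects[key]


class LocalDiskStore:
    """Adapter standing in for a platform-specific store (assumption:
    a cloud adapter would expose the same two methods)."""

    def __init__(self, root: Path) -> None:
        self._root = root

    def write_text(self, key: str, text: str) -> None:
        path = self._root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text, encoding="utf-8")

    def read_text(self, key: str) -> str:
        return (self._root / key).read_text(encoding="utf-8")


def publish_report(store: ObjectStore, name: str, body: str) -> str:
    """Pipeline logic: knows nothing about where the data physically lives."""
    key = f"reports/{name}.txt"
    store.write_text(key, body)
    return key
```

Switching platforms then means writing one new adapter rather than touching every pipeline. It does nothing for the scheduling, monitoring and RBAC dependencies mentioned above, which is why migration stays painful even with a clean design.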

Future proof?

The question remains: is there a way to future-proof our tech stack? The honest truth is that it’s a balancing act, because no matter what tech stack you opt for today, there is a very high chance it will need major upgrades a few years down the line.

This is a sticky topic, but IMHO it is very hard to have a future-proof tech stack. The aim should be to choose a tech stack which is scalable and can deliver features for the coming few years with very little maintenance.

Summary

Here are the summarized points to consider:

- Building services on top of open source platforms / libraries can take considerable effort to build and mature

- Maintaining these services is also an on-going activity

- Teams sometimes struggle to reuse services and end up building their own logic for the same function again – especially true for larger or distributed teams

- If the analytics department is primarily tasked with building insights, then trying to build these services will take way more time – taking you away from the primary objective

- Open source does not mean switching platforms / cloud providers is going to be easy – data pipelines require a lot of integrations and peripheral services which are not always easy to migrate

Given the points above, it may make more sense for analytics teams which are early in their journey or on a growth trajectory to use managed services instead of going open source. While data volumes are still low, the cost of managed services might not be that high, and the focus at that point should be on adopting data-driven decision making rather than on cost optimization.

Once an organization has reached a level of maturity and the analytics team is primarily moving towards supporting self-service, volumes can grow to the point where the cost of managed services outweighs their benefit, making it more cost-effective to build services on open source platforms.
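A rough way to reason about that crossover is a simple linear cost model. This is my own simplification, not a pricing formula from any vendor: assume managed services cost a flat rate per TB processed, while a self-built platform has a fixed monthly cost (mostly engineers’ time) plus a lower rate per TB. All three parameters are assumptions you would have to estimate for your own organization.

```python
from typing import Optional


def break_even_volume_tb(
    managed_cost_per_tb: float,
    self_built_cost_per_tb: float,
    self_built_fixed_cost: float,
) -> Optional[float]:
    """Monthly volume (TB) above which the self-built option is cheaper.

    Model (assumed, linear):
        managed    = managed_cost_per_tb * volume
        self-built = self_built_fixed_cost + self_built_cost_per_tb * volume
    Returns None when self-built is never cheaper per TB, so the two
    cost lines never cross.
    """
    saving_per_tb = managed_cost_per_tb - self_built_cost_per_tb
    if saving_per_tb <= 0:
        return None
    return self_built_fixed_cost / saving_per_tb


# Made-up figures purely to show the shape of the calculation.
example = break_even_volume_tb(
    managed_cost_per_tb=50.0,
    self_built_cost_per_tb=10.0,
    self_built_fixed_cost=20_000.0,
)
```

With these invented numbers the crossover lands at 500 TB a month. Below that, the fixed cost of building and maintaining your own services dominates. Above it, the per-TB premium of managed services does.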